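# Q: How do I run the CDH515 big-data components with Kerberos authentication enabled?

# A: Both the DI Server and the scheduling engine DolphinScheduler must be modified to support Kerberos authentication: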

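# I. Adapting DI Server to CDH515 and Kerberos authentication

# 1. Hadoop adaptation

Place the CDH515 service configuration files in the ${DI_INSTALL_HOME}/diserver/plugins/pentaho-big-data-plugin/hadoop-configurations/cdh515/ directory (obtain the service configuration files from the relevant service provider).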

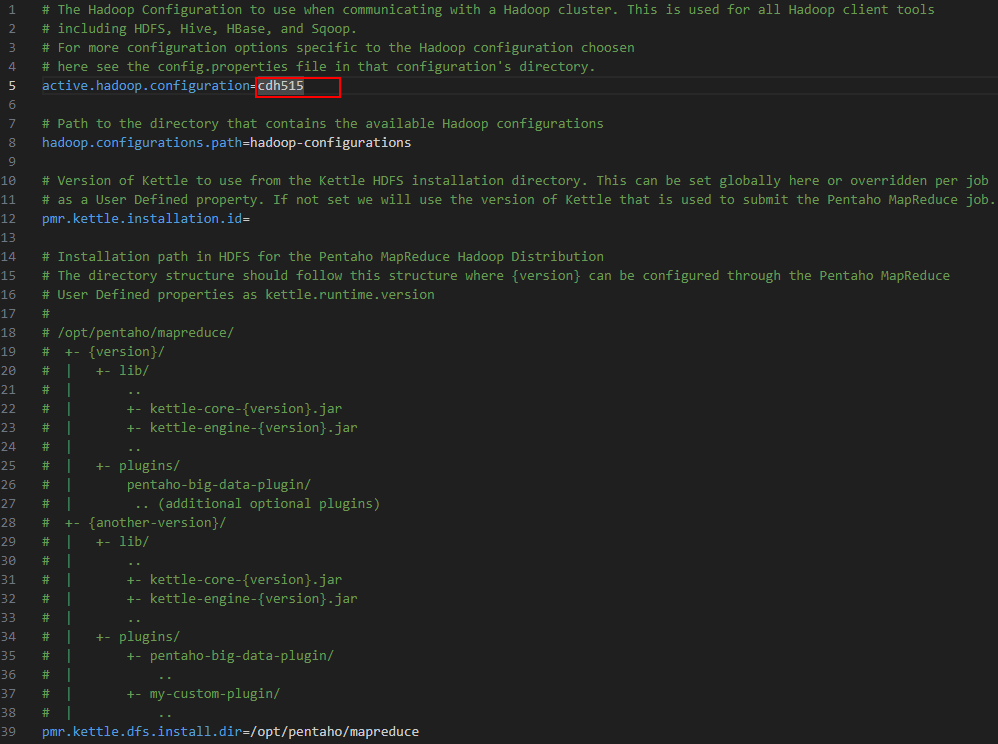

    ├── core-site.xml
    ├── hbase-default.xml
    ├── hbase-site.xml
    ├── hdfs-site.xml
    ├── hive-site.xml
    ├── mapred-site.xml
    └── yarn-site.xml

In the ${DI_INSTALL_HOME}/diserver/plugins/pentaho-big-data-plugin/plugin.properties configuration file, set the active.hadoop.configuration parameter to cdh515.

Here cdh515 is the name of the cdh515 folder under the ${DI_INSTALL_HOME}/diserver/plugins/pentaho-big-data-plugin/hadoop-configurations directory.
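As a quick sanity check of the two steps above, the following minimal sketch verifies that the listed files are present and that plugin.properties selects cdh515. The function names `read_properties` and `check_hadoop_setup` are hypothetical helpers written for this illustration, not part of DI Server, and the file list is simply the one shown in the directory listing.

```python
from pathlib import Path

# File names taken from the directory listing above.
EXPECTED_FILES = [
    "core-site.xml", "hbase-default.xml", "hbase-site.xml", "hdfs-site.xml",
    "hive-site.xml", "mapred-site.xml", "yarn-site.xml",
]


def read_properties(path):
    """Parse a simple key=value .properties file, skipping comments and blanks."""
    props = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def check_hadoop_setup(plugin_dir, shim="cdh515"):
    """Return a list of problems found; an empty list means the checks passed."""
    plugin_dir = Path(plugin_dir)
    problems = []

    # 1. Every expected config file must exist in hadoop-configurations/<shim>/.
    shim_dir = plugin_dir / "hadoop-configurations" / shim
    for name in EXPECTED_FILES:
        if not (shim_dir / name).is_file():
            problems.append(f"missing file: {shim_dir / name}")

    # 2. plugin.properties must point active.hadoop.configuration at the folder.
    props_file = plugin_dir / "plugin.properties"
    if not props_file.is_file():
        problems.append(f"missing file: {props_file}")
    else:
        active = read_properties(props_file).get("active.hadoop.configuration")
        if active != shim:
            problems.append(f"active.hadoop.configuration is {active!r}, expected {shim!r}")
    return problems
```

It could be called with the plugin directory, for example `check_hadoop_setup("/path/to/diserver/plugins/pentaho-big-data-plugin")`, where the path prefix stands in for your own ${DI_INSTALL_HOME}.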

# 2. Kerberos adaptation

Create a new folder named kerberos under ${DI_INSTALL_HOME}/diserver/.

Extract kerberos.zip into ${DI_INSTALL_HOME}/diserver/kerberos.

    ├── krb5.conf
    ├── krb5.keytab
    └── config.properties

Edit the principal entries in config.properties:

    zookeeper.server.principal=zookeeper/cdh01@DWS.COM
    username.client.kerberos.principal=hive/cdh01@DWS.COM
    hbase.master.kerberos.principal=hbase/cdh01@DWS.COM
    hbase.regionserver.kerberos.principal=hbase/cdh01@DWS.COM

Obtain krb5.conf, krb5.keytab, and the principal values from the relevant service provider; the values shown here are for reference only.
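Because a mistyped principal is easy to miss, a small format check may help before starting the server. The sketch below assumes the usual Kerberos principal shape `primary[/instance]@REALM` and only checks syntax; it cannot tell whether a principal actually exists in the KDC. `find_bad_principals` is a hypothetical helper for illustration.

```python
import re

# Usual principal shape: primary[/instance]@REALM, e.g. hive/cdh01@DWS.COM
PRINCIPAL_RE = re.compile(r"[^/@\s]+(/[^/@\s]+)?@[^/@\s]+")


def find_bad_principals(properties_text):
    """Return {key: value} for *.principal entries that are not well formed."""
    bad = {}
    for raw in properties_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if key.endswith(".principal") and not PRINCIPAL_RE.fullmatch(value):
            bad[key] = value
    return bad


# The reference values from config.properties above.
sample = """\
zookeeper.server.principal=zookeeper/cdh01@DWS.COM
username.client.kerberos.principal=hive/cdh01@DWS.COM
hbase.master.kerberos.principal=hbase/cdh01@DWS.COM
hbase.regionserver.kerberos.principal=hbase/cdh01@DWS.COM
"""
print(find_bad_principals(sample))  # prints {}
```

An entry such as `hive/cdh01` with the realm left off would be reported in the returned dictionary.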

# II. Adapting DolphinScheduler to CDH515 and Kerberos authentication

# 1. Hadoop adaptation

Copy the Hadoop cluster's configuration files core-site.xml and hdfs-site.xml for the CDH services into both the worker-server/conf and api-server/conf directories.
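The copy step can be scripted. The following is a minimal sketch in which `hadoop_conf_dir` (where the cluster's files were downloaded) and `ds_home` (the DolphinScheduler installation root) are assumed parameters, and `copy_hadoop_conf` is a hypothetical helper rather than a DolphinScheduler tool.

```python
import shutil
from pathlib import Path

HADOOP_FILES = ("core-site.xml", "hdfs-site.xml")
TARGET_DIRS = ("worker-server/conf", "api-server/conf")


def copy_hadoop_conf(hadoop_conf_dir, ds_home):
    """Copy the Hadoop client configs into each DolphinScheduler conf directory."""
    copied = []
    for target in TARGET_DIRS:
        dest_dir = Path(ds_home) / target
        if not dest_dir.is_dir():
            raise FileNotFoundError(f"conf directory not found: {dest_dir}")
        for name in HADOOP_FILES:
            src = Path(hadoop_conf_dir) / name
            # copy2 preserves timestamps and overwrites an existing file.
            copied.append(Path(shutil.copy2(src, dest_dir / name)))
    return copied
```

The function returns the four destination paths, which makes it easy to log or inspect what was written.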

# 2. Kerberos adaptation

Extract the Kerberos configuration files into a directory of your choice, for example /home/dws/install_home/krb/.

Edit the common.properties file under each of master-server/conf, worker-server/conf, and api-server/conf:

    # whether to start up kerberos
    hadoop.security.authentication.startup.state=true
    # java.security.krb5.conf path
    java.security.krb5.conf.path=/home/dws/install_home/krb/krb5.conf
    # login user from keytab username
    login.user.keytab.username=hdfs/cdh01@DWS.COM
    # login user from keytab path
    login.user.keytab.path=/home/dws/install_home/krb/krb5.keytab
    # kerberos expire time, the unit is hour
    kerberos.expire.time=2

Restart DolphinScheduler.

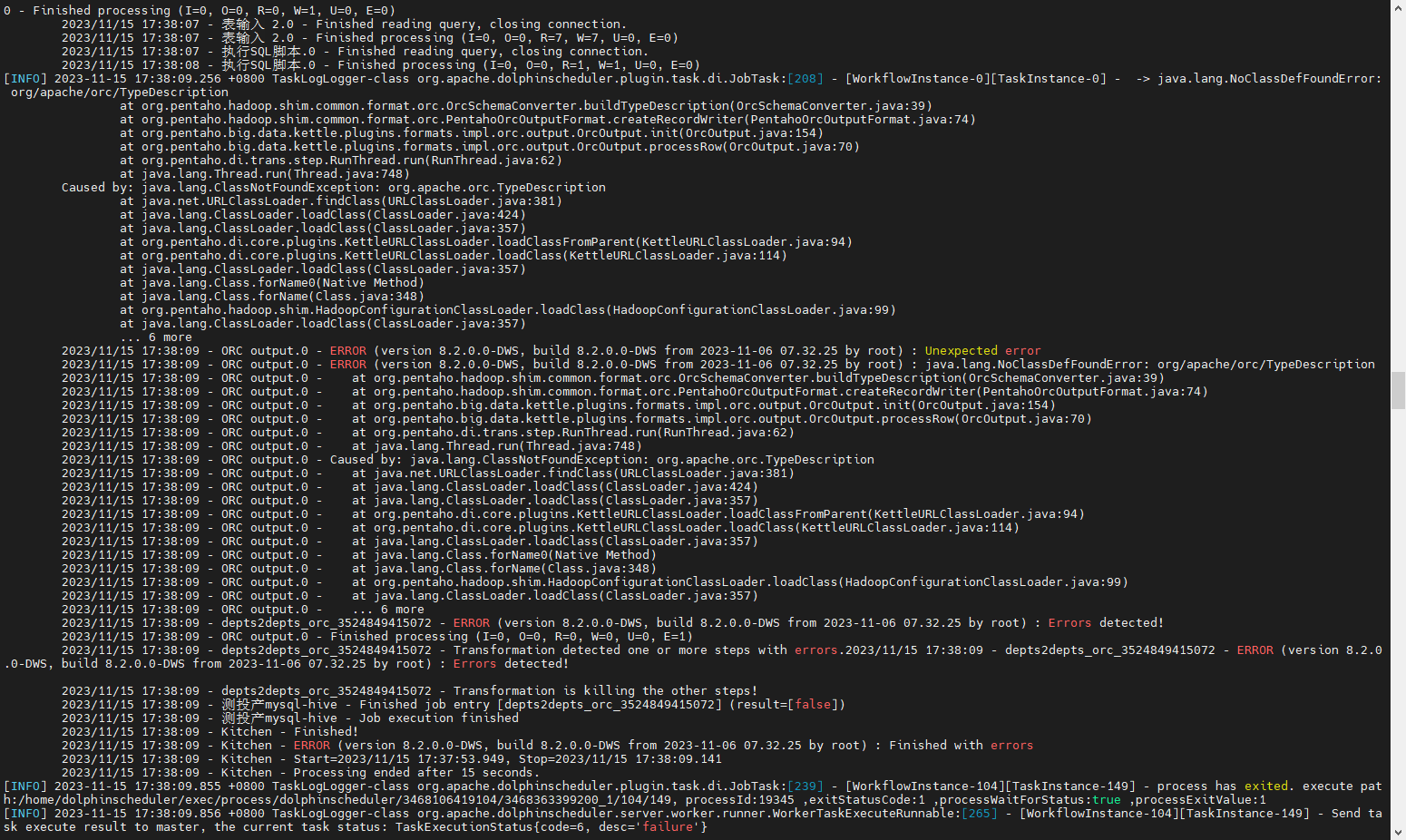
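Since the same five settings go into three copies of common.properties, applying them with a script can reduce copy-and-paste mistakes. The sketch below rewrites existing keys in place and appends any that are missing; `apply_settings` is a hypothetical helper, and the values are the reference values from this answer, which should be replaced with your own before use.

```python
# Reference values from this answer; replace them with your own.
KERBEROS_SETTINGS = {
    "hadoop.security.authentication.startup.state": "true",
    "java.security.krb5.conf.path": "/home/dws/install_home/krb/krb5.conf",
    "login.user.keytab.username": "hdfs/cdh01@DWS.COM",
    "login.user.keytab.path": "/home/dws/install_home/krb/krb5.keytab",
    "kerberos.expire.time": "2",
}


def apply_settings(text, settings):
    """Return properties text with each key set; missing keys are appended."""
    remaining = dict(settings)
    out = []
    for line in text.splitlines():
        stripped = line.strip()
        # Commented lines yield a key starting with "#", which never matches.
        key = stripped.split("=", 1)[0].strip() if "=" in stripped else None
        if key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    # Keys that were not present in the file go at the end.
    out.extend(f"{k}={v}" for k, v in remaining.items())
    return "\n".join(out) + "\n"
```

To use it, read each common.properties under master-server/conf, worker-server/conf, and api-server/conf, pass the text through `apply_settings(text, KERBEROS_SETTINGS)`, write the result back, and then restart DolphinScheduler. Applying it twice gives the same result as applying it once.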

# Q: A batch job with ORC output fails on CDH515 with an error that the class org.apache.orc.TypeDescription cannot be found